From techcrunch.com

Millions of people communicate using sign language, but so far projects to capture its complex gestures and translate them into spoken language have had limited success. A new advance in real-time hand tracking from Google’s AI labs, however, could be the breakthrough some have been waiting for.

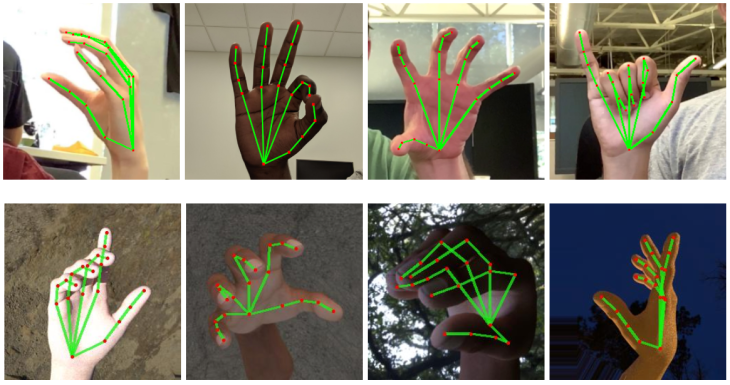

The new technique uses a few clever shortcuts and, of course, the increasing general efficiency of machine learning systems to produce, in real time, a highly accurate map of the hand and all its fingers using nothing but a smartphone and its camera.