From umbrella.cisco.com

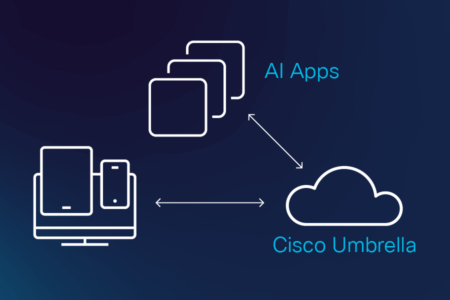

How can information security leaders ensure that employees who use artificial intelligence tools to boost productivity are not putting sensitive company data at risk of leakage? A glance at most LinkedIn or news feeds leaves many of us wondering which AI apps are safe to use, which aren’t, which should be blocked, and which should be limited or restricted.

As reported by Mashable, Samsung employees inadvertently leaked confidential company information to ChatGPT [1] on at least three separate occasions in April. The company initially responded by restricting the length of employee ChatGPT prompts to one kilobyte. Then, on May 1st, it decided to temporarily ban employee use of generative AI tools on company-owned devices, as well as on non-company-owned devices running on internal networks.