From securityonline.info

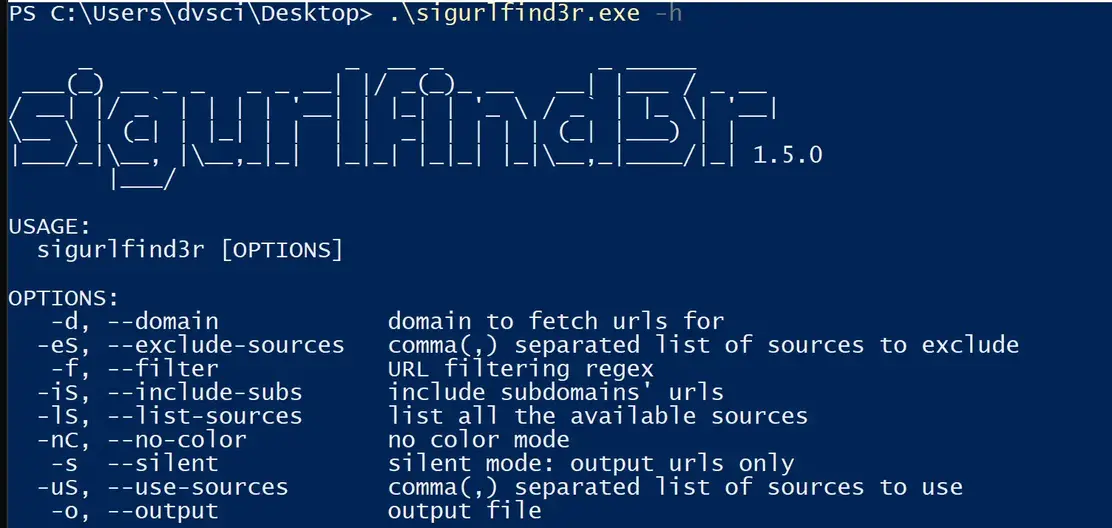

A passive reconnaissance tool for discovering known URLs: it gathers a list of URLs passively from various online sources.

Features

- Collect known URLs:
    - Fetches URLs from AlienVault’s OTX, Common Crawl, URLScan, GitHub, and the Wayback Machine.
    - Fetches disallowed paths from robots.txt files found on your target domain and snapshotted by the Wayback Machine.
- Reduce noise:
    - Filters URLs with a regular expression.
    - Removes duplicate pages, i.e. URLs whose patterns are probably repetitive and point to the same web template.
- Outputs results to stdout for piping, or saves them to a file.
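To illustrate the Wayback Machine source, here is a minimal Python sketch that queries the Internet Archive's public CDX server. The endpoint and its `url`, `matchType`, `output`, `fl` and `collapse` parameters belong to the real CDX API; the function names are hypothetical, and this is not necessarily how the tool itself queries the archive.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public CDX endpoint of the Internet Archive's Wayback Machine.
CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_query(domain: str) -> str:
    """Build a CDX query listing archived URLs for a domain and its subdomains."""
    params = {
        "url": domain,
        "matchType": "domain",  # include subdomains of `domain`
        "output": "json",       # JSON array of rows; the first row is a header
        "fl": "original",       # return only the originally captured URL
        "collapse": "urlkey",   # one row per unique (canonicalized) URL
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def parse_cdx_json(body: str) -> list[str]:
    """Extract the 'original' column from a CDX JSON response."""
    if not body.strip():
        return []
    rows = json.loads(body)
    if not rows:
        return []
    column = rows[0].index("original")  # header row names the fields
    return [row[column] for row in rows[1:]]


def fetch_wayback_urls(domain: str, timeout: float = 30.0) -> list[str]:
    """Hypothetical helper: run the query live and return the archived URLs."""
    with urlopen(build_cdx_query(domain), timeout=timeout) as resp:
        return parse_cdx_json(resp.read().decode("utf-8"))
```

Calling `fetch_wayback_urls("example.com")` performs a live network request. For large domains the response can be very big, so a real collector would likely need to page through results rather than fetch everything in one call.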
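The robots.txt source can be sketched as follows. This assumes the robots.txt snapshots have already been downloaded (for example from the Wayback Machine); the hypothetical `disallowed_urls` function only extracts `Disallow` paths and resolves them against the target's base URL. It ignores `User-agent` grouping and keeps wildcard characters verbatim, which the actual tool may handle differently.

```python
from urllib.parse import urljoin


def disallowed_urls(robots_txt: str, base_url: str) -> list[str]:
    """Hypothetical helper: turn Disallow rules into absolute URLs (order kept, no duplicates)."""
    seen: set[str] = set()
    urls: list[str] = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        if field.strip().lower() != "disallow":
            continue  # skip User-agent, Allow, Sitemap, etc.
        path = value.strip()
        if not path:
            continue  # an empty Disallow means "allow everything"
        url = urljoin(base_url, path)
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls
```

Because disallowed paths are often ones a site owner would rather not have indexed, they tend to be useful starting points for further reconnaissance.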
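The noise-reduction features can be approximated with a short Python sketch. The tool's exact heuristic for spotting repetitive URL patterns is not described here, so this assumes a simple one: numeric path segments are replaced by a placeholder and query-string values are ignored, so `/post/1?ref=a` and `/post/2?ref=b` share a pattern and only the first is kept. `reduce_noise` and `pattern_key` are hypothetical names.

```python
import re
from typing import Iterable, Optional
from urllib.parse import parse_qsl, urlsplit

NUMERIC = re.compile(r"\d+")


def pattern_key(url: str) -> tuple:
    """Assumed heuristic: collapse numeric path segments and ignore query values."""
    parts = urlsplit(url)
    segments = ["{num}" if NUMERIC.fullmatch(seg) else seg for seg in parts.path.split("/")]
    query_keys = tuple(sorted({k for k, _ in parse_qsl(parts.query, keep_blank_values=True)}))
    return (parts.scheme, parts.netloc.lower(), "/".join(segments), query_keys)


def reduce_noise(urls: Iterable[str], pattern: Optional[str] = None) -> list[str]:
    """Keep URLs matching `pattern` (if given), then drop later URLs with a seen pattern."""
    regex = re.compile(pattern) if pattern else None
    seen: set[tuple] = set()
    kept: list[str] = []
    for url in urls:
        if regex and not regex.search(url):
            continue  # regex filter
        key = pattern_key(url)
        if key in seen:
            continue  # same template as an earlier URL
        seen.add(key)
        kept.append(url)
    return kept
```

Returning a plain list keeps the result easy to print line by line to stdout for piping, or to write to a file.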