From thehackernews.com

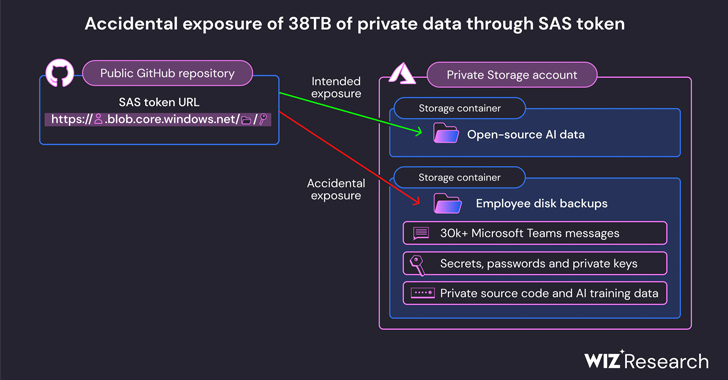

Microsoft on Monday said it took steps to correct a glaring security gaffe that led to the exposure of 38 terabytes of private data.

The leak was discovered on the company’s AI GitHub repository. According to Wiz, the data was inadvertently made public when a bucket of open-source training data was published. The exposed data also included a disk backup of two former employees’ workstations containing secrets, keys, passwords, and over 30,000 internal Teams messages.

The repository, named “robust-models-transfer,” is no longer accessible. Prior to its takedown, it featured source code and machine learning models pertaining to a 2020 research paper titled “Do Adversarially Robust ImageNet Models Transfer Better?”