From helpnetsecurity.com

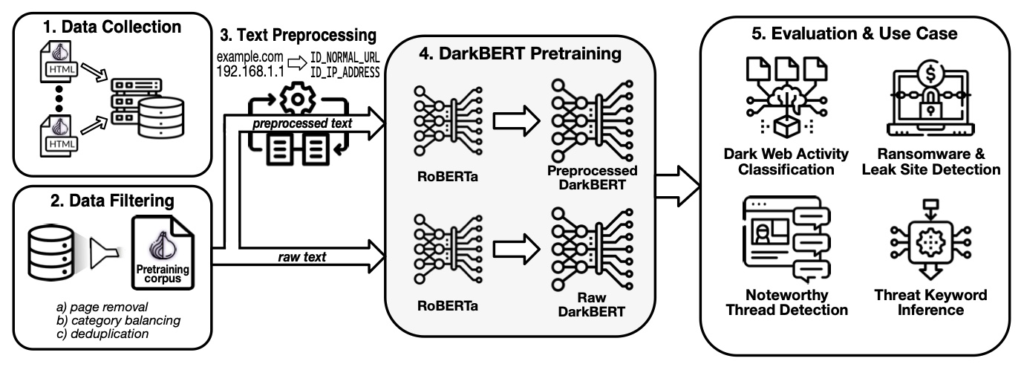

Researchers have developed DarkBERT, a language model pretrained on dark web data, to help cybersecurity pros extract cyber threat intelligence (CTI) from the Internet’s virtual underbelly.

DarkBERT: A language model for the dark web

For quite a while now, researchers and cybersecurity experts have been leveraging natural language processing (NLP) to better understand and deal with the threat landscape. NLP tools have become an integral part of CTI research.

The dark web, known as a “playground” for individuals involved in illegal activities, poses distinct challenges when it comes to extracting and analyzing CTI at scale.

A team of researchers from the Korea Advanced Institute of Science and Technology (KAIST) and data intelligence company S2W set out to test whether a custom-trained language model could be useful for that purpose. The result is DarkBERT, which is pretrained on dark web data (i.e., on the specific language used in that domain).